From Organizing Information to Synthesizing Knowledge

Google was founded in 1998 with the mission “to organize the world’s information and make it universally accessible and useful.”[1] Its founders, Larry Page and Sergey Brin, pioneered a mathematical algorithm called PageRank, which ranked web pages in a searchable index by the number and importance of the links pointing to them, helping surface the most relevant pages for a user’s search query. Generative Artificial Intelligence (“genAI”) represents a fundamental shift in computer science, moving beyond simply indexing and organizing information to assimilating and synthesizing much of the world’s recorded knowledge.
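For readers curious about the mechanics, the core idea behind PageRank can be sketched in a few lines of Python: a page is important if important pages link to it. This is a simplified textbook version (power iteration with a damping factor of 0.85, the value suggested in the original PageRank paper) run on a hypothetical four-page web. Google’s production ranking combined many additional signals and is not reproduced here.

```python
# Simplified PageRank via power iteration on a tiny, hypothetical link graph.
# Assumptions: damping factor 0.85, and every page has at least one outgoing
# link (no "dangling" pages), so the scores always sum to 1.

def pagerank(links, damping=0.85, iterations=50):
    """links maps each page to the list of pages it links to."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal importance
    for _ in range(iterations):
        # Every page gets a small baseline share...
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outgoing in links.items():
            # ...plus a share of the rank of each page that links to it.
            share = rank[page] / len(outgoing)
            for target in outgoing:
                new_rank[target] += damping * share
        rank = new_rank
    return rank

# Hypothetical web: page "C" is linked to by every other page.
web = {
    "A": ["B", "C"],
    "B": ["C"],
    "C": ["A"],
    "D": ["C"],
}

for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.3f}")
```

Page C, which every other page links to, ends up with the highest score, while page D, which no page links to, keeps only the small baseline share that the damping factor gives every page.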

Modern chatbots, such as ChatGPT from OpenAI, are powered by Large Language Models (“LLMs”). LLMs are built on artificial neural networks, a computational architecture loosely inspired by the human brain that runs on specialized processors. There are some parallels between how an LLM processes information and how a human being thinks, but the two are fundamentally different. Our goal in this series is to demystify neural networks, LLMs, and genAI, and to describe them not as the sentient beings portrayed in science fiction but as a new class of tools for synthesizing insights.

GenAI is a relatively new branch of Artificial Intelligence (AI), and it brings with it a lot of new terminology, which we will explain along the way to make the subject more accessible. We begin by looking below the surface at how neural networks are trained and used. In future articles, we’ll build on these ideas as we explore the new applications of genAI that are driving today’s multitrillion-dollar datacenter buildout.

The Engine Behind AI: Neural Networks Demystified

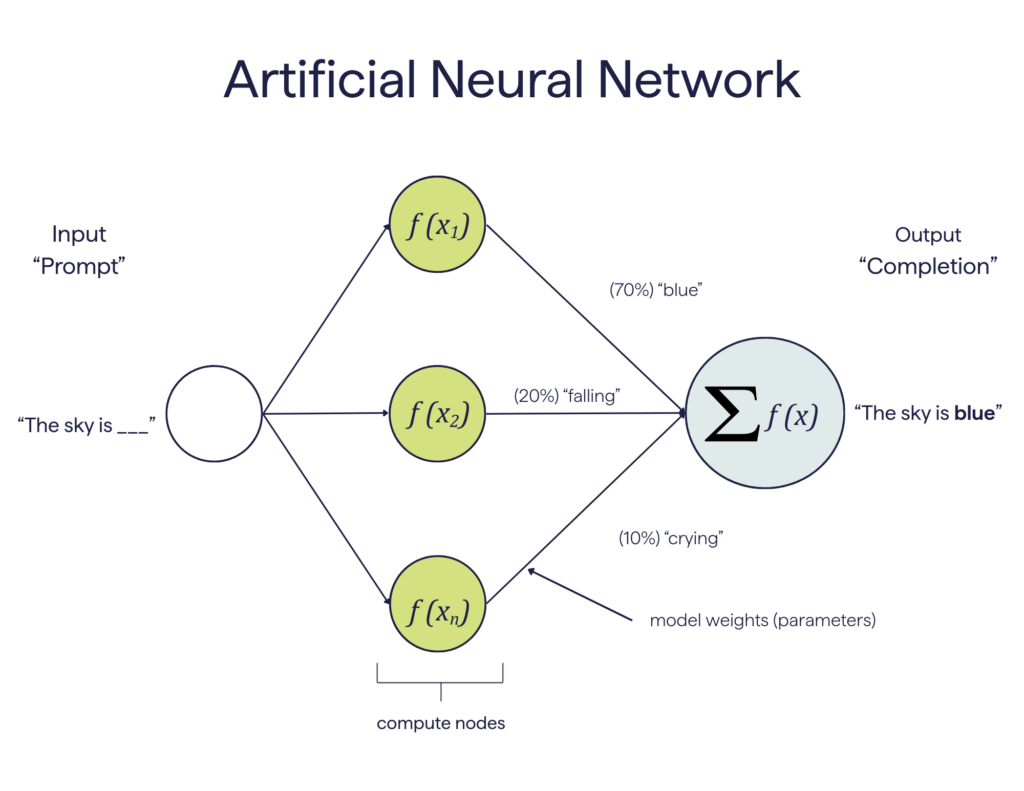

At the highest level, an artificial neural network is a mathematical model that converts an input, such as a text prompt represented as numbers, into an output. Loosely inspired by the human brain, it uses an array of simple computational nodes (“neurons”) connected by adjustable weights (“synapses”). The nodes perform calculations on the input based on its content and context, capturing patterns such as the frequency and proximity of specific words within a sentence, a paragraph, or an entire book. Guided by these weights, an LLM generates its response one word at a time (more precisely, one “token”, which may be a whole word or a fragment of one), producing an output sequence called a “completion”. This is illustrated schematically in the accompanying figure, where the model infers the next word in the phrase “The sky is ___”. Based on its training, the most likely word to complete the phrase is “blue”, with a 70% probability. The weights are learned during pre-training and further refined during post-training, both of which we discuss below. Because the completion is “inferred” from what the model learned in training, this step is called “inference”. In practice, prompts can be very detailed and may include entire documents and images for context, all of which pass through the model to generate a completion.
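To make the “The sky is ___” example concrete, here is a toy Python sketch of the final step of next-word prediction: each candidate word’s output node computes a weighted sum of its inputs, a softmax function turns those scores into probabilities, and the most likely word is chosen. The four-word vocabulary, the context vector, and the weights are all made-up numbers for illustration; a real LLM passes the prompt through many stacked layers and scores tens of thousands of possible tokens at every step.

```python
import math

# Toy vocabulary of candidate next words (hypothetical; real LLMs score
# tens of thousands of tokens at every step).
VOCAB = ["blue", "clear", "gray", "falling"]

# Hypothetical "context vector" summarizing the prompt "The sky is".
# In a real model this comes out of many stacked layers; here we just assume it.
context = [0.9, 0.2, -0.4]

# Hypothetical learned weights: one row per candidate word, one column per
# context feature. Training is what sets these numbers.
weights = [
    [2.0, 0.5, -1.0],   # blue
    [0.8, 1.0, 0.0],    # clear
    [0.5, -0.5, 0.5],   # gray
    [-1.0, 0.2, 0.3],   # falling
]

def scores(ctx, w):
    """Each output node computes a weighted sum of its inputs."""
    return [sum(wi * xi for wi, xi in zip(row, ctx)) for row in w]

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax(scores(context, weights))
for word, p in sorted(zip(VOCAB, probs), key=lambda wp: -wp[1]):
    print(f"{word:8s} {p:.2f}")

# Greedy decoding: pick the single most likely next word.
next_word = VOCAB[probs.index(max(probs))]
print("The sky is", next_word)
```

With these made-up numbers, “blue” comes out at roughly 71%, close to the 70% in the example. Always picking the top word is called greedy decoding; chatbots often sample from the probabilities instead, which is one reason the same prompt can yield different completions.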

Newer training strategies use “alignment” techniques to steer the model toward more accurate responses and to sharpen its expertise in certain areas. Alignment can also help prevent the model from generating harmful content drawn from its vast training data, an active area of research at the model labs. It is worth pausing to recognize that inferring, or synthesizing, an answer from context is very different from a search engine retrieving links to web pages. The answers generated by an LLM come entirely from two things: the statistical patterns learned during training and the context provided in the prompt. That means the way a model is trained and aligned, as well as the contextual data supplied during inference, are the key factors that determine a response.

How AI Learns: Pre-Training vs. Post-Training

Today’s leading LLMs are estimated to use on the order of a trillion parameters and are trained on an enormous corpus of data. Much of this data is publicly accessible, such as the regularly updated archive of the public internet called the “Common Crawl”[2], as well as YouTube videos and other sources. The initial pre-training phase can take months to complete and can consume as much power as a small city as the model’s parameters are set to capture what is essentially a snapshot of the world’s public knowledge. Ilya Sutskever, co-founder and former chief scientist at OpenAI, recently noted[3] that today’s leading models appear to be converging, possibly because they are trained on similar, publicly available data.

In the future, models will likely be differentiated by specialization, using proprietary data during post-training to align and orient LLMs toward specific areas, such as healthcare and education. If pre-training is where an LLM gains general knowledge, post-training is where it gains domain expertise. Techniques such as Supervised Fine-Tuning (SFT) train the model on labeled examples of desired responses, while Reinforcement Learning with Verifiable Rewards (RLVR) rewards the model for reaching answers that can be checked automatically, such as the correct solution to a math problem, teaching it to develop strategies that reach an objective result. These and other post-training techniques can significantly enhance a model’s perceived intelligence in certain situations; however, it’s important not to lose sight of the fact that LLMs are tools for synthesizing knowledge, not sentient beings with human judgment.
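The “verifiable” part of RLVR can be illustrated with a small Python sketch. It shows only the reward signal: a function that checks a model’s final answer against a known-correct one and returns 1 or 0. The task, the two completions, and the “Answer:” output convention are hypothetical, and the reinforcement-learning algorithm that uses these rewards to adjust the model’s weights is omitted. SFT, by contrast, would simply train the model to reproduce labeled (prompt, desired response) pairs.

```python
import re
from typing import Optional

# Hypothetical task whose answer can be checked automatically.
task = {"question": "What is 17 * 23?", "answer": "391"}

def extract_final_answer(completion: str) -> Optional[str]:
    """Pull the number after 'Answer:' (an assumed output convention)."""
    match = re.search(r"Answer:\s*(-?\d+)", completion)
    return match.group(1) if match else None

def verifiable_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the final answer matches the known-correct one, else 0.0."""
    return 1.0 if extract_final_answer(completion) == reference else 0.0

# Two hypothetical completions the model might produce for the same task.
good = "17 * 20 = 340 and 17 * 3 = 51, so 340 + 51 = 391. Answer: 391"
bad = "17 * 25 is about 425. Answer: 425"

print("reward for good completion:", verifiable_reward(good, task["answer"]))
print("reward for bad completion:", verifiable_reward(bad, task["answer"]))
```

Because the reward depends only on whether the final answer is correct, the model is free to work out its own intermediate steps, which is the sense in which RLVR teaches a model to strategize toward an objective result.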

Navigating the AI Boom with Discipline

Artificial Intelligence is not a passing trend but a structural shift that will likely redefine industries and markets for decades to come. The success of chatbots such as ChatGPT, the rapid advances in model performance, and the potential productivity benefits of AI have led to a historic infusion of capital to build out the necessary supporting infrastructure. Historically, investment booms in new technologies have often been followed by busts, and this time may be no different. Focusing on companies with strong balance sheets and wide competitive moats is a key part of our investment strategy, and that discipline will remain critical during AI’s buildout phase. Before long, we expect virtually all companies to embrace genAI to some degree to boost productivity and drive growth, just as virtually every company ultimately embraced the web. In future articles in this series, we’ll explore new topics and emerging trends in genAI, and, as always, your 1919 team is available to answer any questions.

__________

[1] Page, Larry, and Sergey Brin. “Founders’ IPO Letter.” Google Inc. Form S-1 Registration Statement, U.S. Securities and Exchange Commission, April 29, 2004.

[2] “Common Crawl.” Grokipedia. https://grokipedia.com/page/Common_Crawl

[3] Sutskever, Ilya. “We’re moving from the age of scaling to the age of research.” Dwarkesh Podcast, November 25, 2025.

Disclosures

The information provided here is for general informational purposes only and should not be considered an individualized recommendation or personalized investment advice. No part of this material may be reproduced in any form, or referred to in any other publication, without the express written permission of 1919 Investment Counsel, LLC (“1919”). This material contains statements of opinion and belief. Any views expressed herein are those of 1919 as of the date indicated, are based on information available to 1919 as of such date, and are subject to change, without notice, based on market and other conditions. There is no guarantee that the trends discussed herein will continue, or that forward-looking statements and forecasts will materialize. This material has not been reviewed or endorsed by regulatory agencies. Third party information contained herein has been obtained from sources believed to be reliable, but not guaranteed.

1919 Investment Counsel, LLC is a registered investment advisor with the U.S. Securities and Exchange Commission. 1919 Investment Counsel, LLC, a subsidiary of Stifel Financial Corp., is a trademark in the United States. 1919 Investment Counsel, LLC, One South Street, Suite 2500, Baltimore, MD 21202. ©2025, 1919 Investment Counsel, LLC. MM-00002240

Published: January 2026